PSNR: 31.54 | # GS (M): 0.51 | FPS: 435.0

PSNR: 31.54 | # GS (M): 1.75 | FPS: 195.1
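The two sets of figures above can be compared directly with a little arithmetic. The sketch below is only illustrative: it assumes the 0.51M / 435.0 FPS numbers belong to the pruned model and the 1.75M / 195.1 FPS numbers to the unpruned baseline, which the labels themselves do not state. The function and key names are hypothetical.

```python
# Minimal sketch: compare two reported configurations at equal PSNR.
# Assumption (not stated by the labels): the first set of numbers is the
# pruned model and the second is the unpruned baseline.

def compare(baseline: dict, pruned: dict) -> dict:
    """Return simple ratios between a baseline and a pruned configuration."""
    return {
        # How many times fewer Gaussians the pruned model uses.
        "gaussian_reduction": baseline["gs_millions"] / pruned["gs_millions"],
        # Fraction of the baseline's Gaussians that were removed.
        "fraction_removed": 1.0 - pruned["gs_millions"] / baseline["gs_millions"],
        # Rendering speedup in frames per second.
        "fps_speedup": pruned["fps"] / baseline["fps"],
        # PSNR difference in dB (positive means the pruned model is better).
        "psnr_delta": pruned["psnr"] - baseline["psnr"],
    }

baseline = {"psnr": 31.54, "gs_millions": 1.75, "fps": 195.1}
pruned = {"psnr": 31.54, "gs_millions": 0.51, "fps": 435.0}

ratios = compare(baseline, pruned)
for name, value in ratios.items():
    print(f"{name}: {value:.3f}")
```

Under that assumption, the numbers work out to roughly 3.4x fewer Gaussians and about 2.2x higher FPS at identical PSNR.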

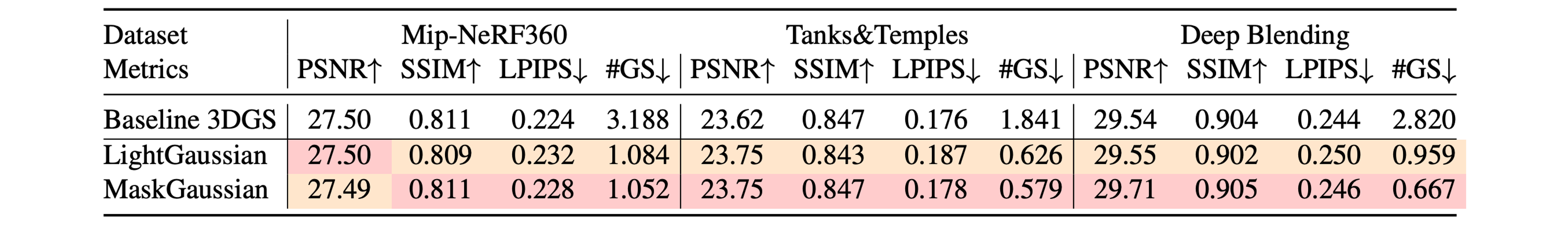

Results on Mip-NeRF 360, Tanks & Temples, and Deep Blending.

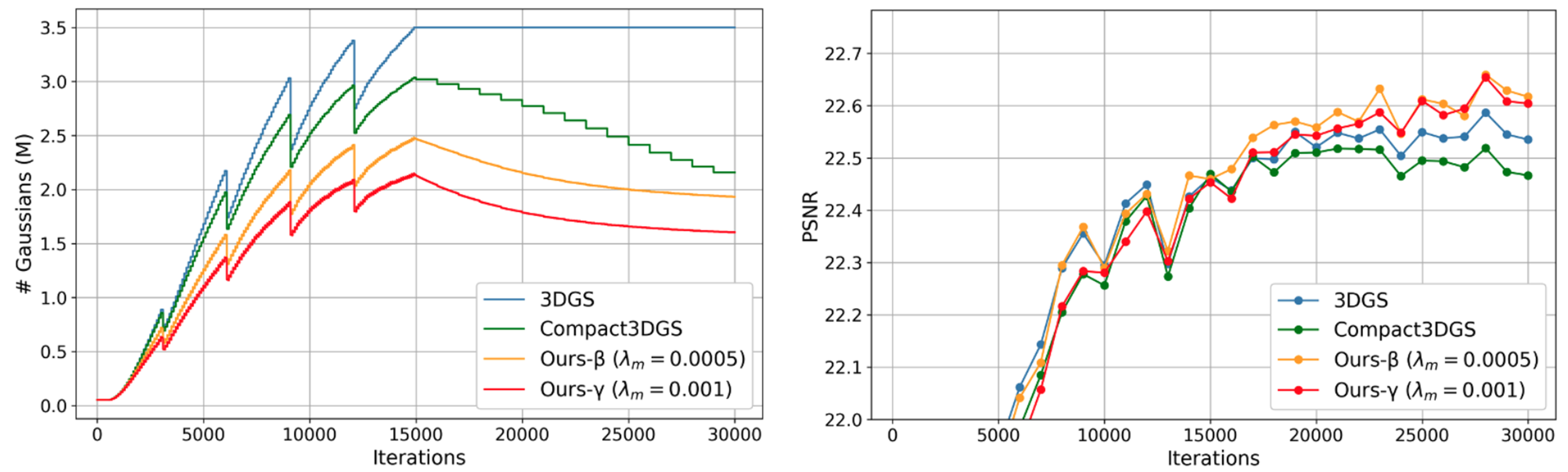

Compared to Compact3DGS, MaskGaussian progressively prunes more Gaussians, leading to faster training and lower GPU memory requirements while retaining higher reconstruction quality.

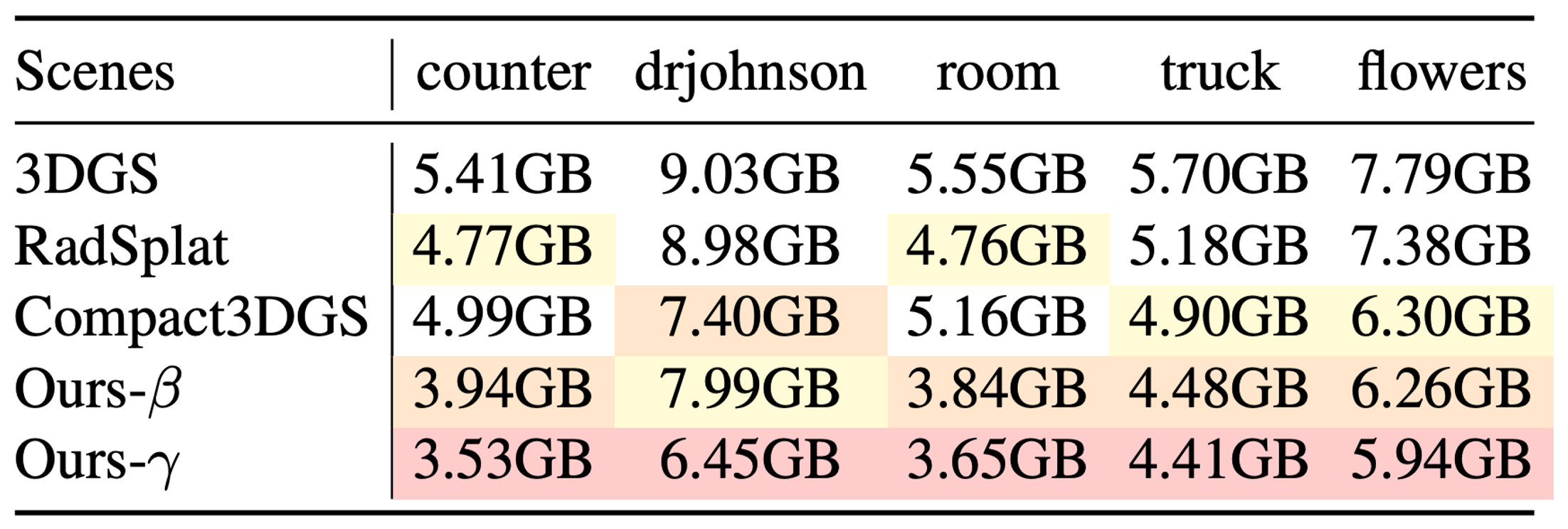

Peak GPU Memory Cost:

Although not specifically designed for post-training, MaskGaussian can also fine-tune an existing Gaussian scene. It works as a drop-in replacement for LightGaussian: the commands are exactly the same, with no modifications needed. Try it out with the code here!

In principle, our method can prune Gaussians in any state-of-the-art 3DGS pipeline. For example, we add MaskGaussian to Taming-3DGS and show that it is more efficient than directly controlling Taming-3DGS's target budget.